Significance test calculations in modern reports

Learn about the significance tests the application runs to identify significant results in your reports.

When you apply significance testing to a report, the appropriate significance test is applied to each selected question in the report based on the question type:

| Question type | Two-tailed t-test | Two-tailed z-test |
|---|---|---|
| Single Choice question | | ✓ |
| Single Choice Grid question | | ✓ |
| Multiple Choice question | | ✓ |
| Multiple Choice Grid question | | ✓ |
| Number question | ✓ | |

Both tests are two-tailed, meaning that they test for significance in both the positive and negative directions. In a two-tailed test, the significance level is divided by two, and each half forms a region at one edge (tail) of the distribution.

The significance level (α) is calculated by subtracting the confidence level from 100%. For example, for a 95% confidence level, the significance level is 100% - 95% = 5% = 0.05. For a two-tailed test, the 0.05 significance level is divided by two, so a region of 0.025 is formed at each edge of the distribution.
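As a minimal sketch (Python, standard library only), the steps above can be expressed as code that turns a confidence level into α and the two-tailed critical value. It assumes a standard normal distribution, as used by the z-test; the function name `two_tailed_cutoff` is hypothetical and not part of the application.

```python
from statistics import NormalDist

def two_tailed_cutoff(confidence_level: float) -> tuple[float, float]:
    """Return (alpha, critical |z|) for a two-tailed test.

    confidence_level is a fraction, e.g. 0.95 for 95%.
    """
    alpha = 1.0 - confidence_level          # significance level
    tail = alpha / 2                         # area placed in each tail
    z_crit = NormalDist().inv_cdf(1 - tail)  # |z| beyond this lands in a tail
    return alpha, z_crit

# Confidence levels offered when configuring significance testing
for level in (0.99, 0.95, 0.90, 0.80, 0.65):
    alpha, z_crit = two_tailed_cutoff(level)
    print(f"{level:.0%} confidence: alpha = {alpha:.2f}, critical |z| = {z_crit:.3f}")
```

Lower confidence levels produce smaller critical values, so a smaller observed difference is enough to cross the cutoff.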

Confidence level and p-values

You choose the confidence level before you begin your analysis. You can choose from the available confidence levels (99%, 95%, 90%, 80%, and 65%) when you configure significance testing for a report.

If you specify a 95% confidence level, it means that if you repeated your survey 100 times with different participants and calculated a confidence interval each time, about 95 of those intervals would be expected to contain the true population value. For example, your results might show that:

- If you are studying income, people with a higher education level are more likely to have a higher income.

- If you are studying proportions, one group of people is more likely than another to hold a certain preference.

The confidence level tells you how confident you can be that your estimated range (confidence interval) contains the true value that exists in the entire population you are surveying.
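The repeated-survey interpretation of a confidence level can be checked with a small simulation. This sketch assumes a hypothetical true proportion of 0.6, 500 participants per survey, and a simple normal-approximation (Wald) interval; these choices and the function name `simulate_coverage` are illustrative, not how the application calculates its results.

```python
import math
import random
from statistics import NormalDist

def simulate_coverage(true_p: float, n: int, confidence_level: float,
                      repeats: int, seed: int = 0) -> float:
    """Fraction of simulated surveys whose confidence interval contains true_p."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(1 - (1 - confidence_level) / 2)
    hits = 0
    for _ in range(repeats):
        # One simulated survey: each of n participants says "yes" with probability true_p
        yes = sum(1 for _ in range(n) if rng.random() < true_p)
        p_hat = yes / n
        margin = z * math.sqrt(p_hat * (1 - p_hat) / n)  # Wald interval half-width
        if p_hat - margin <= true_p <= p_hat + margin:
            hits += 1
    return hits / repeats

print(simulate_coverage(true_p=0.6, n=500, confidence_level=0.95, repeats=2000))
```

The printed fraction should be close to 0.95, although the exact value varies with the random seed.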

Choosing a lower confidence level raises the significance level (α), so more differences may be identified as significant, but it also increases the chance that some of the differences identified are false positives.

The p-value is the probability of obtaining your results, or more surprising results, assuming there is no real difference between the groups being compared. It is compared to the significance level (α) to determine statistical significance.

- If the p-value <= α, you can conclude that the results are statistically significant.

- If the p-value > α, you can conclude that the results are not statistically significant.
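The decision rule can be written as a small function. This is a sketch; `is_significant` is a hypothetical name, and the confidence level is passed as a whole-number percentage (for example, 95) so that α is computed without floating-point surprises at the boundary.

```python
def is_significant(p_value: float, confidence_percent: int) -> bool:
    """Significant when p-value <= alpha, where alpha = 100% - confidence level."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    alpha = (100 - confidence_percent) / 100
    return p_value <= alpha

print(is_significant(0.03, 95))  # → True
print(is_significant(0.07, 95))  # → False
```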

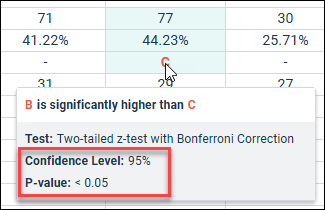

When you hover over a letter in the crosstab report that indicates at least one significant difference, the pop-up displays the following information:

- The confidence level selected when the significance test ran. The significance level can be derived from this value by subtracting the confidence level from 100%.

- The p-value calculated by the test.

Note: For p-values less than 0.05, < 0.05 is displayed instead of the exact value.

Two-tailed z-test with Bonferroni Correction

The z-test is used to determine whether there is a significant difference between two sample groups by comparing their proportions (for example, 0.6 vs. 0.4), which can also be expressed as percentages (60% vs. 40%).

The z-test is used to perform significance testing on the following question types:

- Single Choice

- Single Choice Grid

- Multiple Choice

- Multiple Choice Grid
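A minimal sketch of a two-tailed two-proportion z-test in Python follows. It assumes the common pooled-proportion standard error; the application's exact formula is not documented here, and the function name and counts are hypothetical.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Two-tailed z-test for the difference between two proportions.

    Uses the pooled proportion for the standard error (an assumption).
    Returns (z statistic, two-tailed p-value).
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; proportions are all 0 or all 1")
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # area in both tails
    return z, p_value

# Hypothetical counts: 120 of 200 (60%) in one banner vs. 80 of 200 (40%) in another
z, p = two_proportion_z_test(120, 200, 80, 200)
print(f"z = {z:.2f}, p = {p:.5f}")
```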

The Bonferroni correction adjusts the significance level when several statistical tests are performed simultaneously. This helps prevent false positives, which become more likely as the number of tests increases. A set of significance tests performed together in a study is known as a family. The family-wise error rate (FWER) is the probability of finding at least one false positive among all of the tests in the family. The Bonferroni correction adjusts your significance level to account for the additional tests so that the FWER stays at or below the original significance level for your report, based on the selected confidence level.

The formula for the Bonferroni correction is:

α(adjusted) = α(original) / n

- α is the significance level

- n is the number of comparisons
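The formula translates directly into code. `bonferroni_alpha` is a hypothetical helper name used for illustration.

```python
def bonferroni_alpha(alpha: float, n_comparisons: int) -> float:
    """Bonferroni-adjusted significance level: alpha / n."""
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be at least 1")
    return alpha / n_comparisons

print(f"{bonferroni_alpha(0.05, 6):.4f}")  # → 0.0083
```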

Example: Adjusting the significance level for 4 banners

You are comparing banners A, B, C, and D with a 95% confidence level, which gives a significance level of 0.05. Comparing each banner with each of the others requires 6 comparisons:

- A vs. B

- A vs. C

- A vs. D

- B vs. C

- B vs. D

- C vs. D
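For k banners, the number of pairwise comparisons is k(k - 1)/2. A short sketch using Python's `itertools.combinations` enumerates them; the helper name `pairwise_comparisons` is hypothetical.

```python
from itertools import combinations

def pairwise_comparisons(banners: list[str]) -> list[tuple[str, str]]:
    """Every unordered pair of banners, each pair listed once."""
    return list(combinations(banners, 2))

pairs = pairwise_comparisons(["A", "B", "C", "D"])
for a, b in pairs:
    print(f"{a} vs. {b}")
print(len(pairs))  # → 6, i.e. 4 * 3 / 2
```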

The Bonferroni-adjusted significance level is 0.05/6 = 0.0083. This means that instead of considering p-values < 0.05 statistically significant, you only consider p-values < 0.0083 statistically significant.

The p-value is a measure of the probability of obtaining your result, or an even more surprising result, under the assumption that there is no real difference between the groups being compared. It is calculated from the sample data, and it ranges from 0 to 1.

Note: If you calculate the FWER using the adjusted significance level, you find that the combined significance level for the family of 6 tests is close to the original 0.05 significance level. The formula, which assumes the tests are independent, is 1 - (1 - α)^n, where n is the number of tests. The FWER for the 6 tests using the Bonferroni-adjusted significance level is:

1 - (1 - 0.05/6)^6 ≈ 0.049
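The FWER formula can be evaluated for both the original and the Bonferroni-adjusted significance level. Like the formula itself, this sketch assumes the tests are independent; `family_wise_error_rate` is a hypothetical name.

```python
def family_wise_error_rate(alpha: float, n_tests: int) -> float:
    """Probability of at least one false positive among n independent tests."""
    return 1 - (1 - alpha) ** n_tests

alpha, n = 0.05, 6
print(f"Unadjusted:          {family_wise_error_rate(alpha, n):.3f}")      # → 0.265
print(f"Bonferroni-adjusted: {family_wise_error_rate(alpha / n, n):.3f}")  # → 0.049
```

Without the correction, the chance of at least one false positive across the 6 tests rises to roughly 26%.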

If your results were as follows:

Using the Bonferroni correction, only two comparisons are statistically significant:

Without the Bonferroni correction, five of the comparisons (all except A vs. D) would have been considered statistically significant, potentially leading to false conclusions.

Two-tailed t-test

The t-test is used to determine whether there is a significant difference between the means of two sample groups. It is used to perform significance testing on Number questions.

For example, the t-test can be used to assess differences in people's estimated monthly budget for a given category.

The minimum sample size for each banner being tested is 30.