Apply significance tests to a modern report

Analyze report data to identify statistically significant differences.

- Open the modern report.

The report must include at least one banner. By default, each banner column must have at least 30 responses to be included in significance tests; you can raise this threshold with the Minimum Sample Size setting.

- In the report toolbar, select Statistics > Significance Testing.

Significance testing is turned on by default; its toggle is next to the Pairwise Column Test heading. If you later decide not to include significance testing in your report, turn off the toggle.
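This article does not document the exact formula behind the Pairwise Column Test. As a rough illustration only, a pairwise column test for one answer row can be sketched as a pooled two-proportion z-test run on every pair of banner columns, flagging a pair when |z| reaches the two-sided critical value for the chosen confidence level. The function names and the `(count, base)` input format are hypothetical, and the product may use a different test or apply corrections this sketch omits.

```python
from itertools import combinations
from math import sqrt
from statistics import NormalDist


def two_proportion_z(x1, n1, x2, n2):
    """Pooled two-proportion z statistic for x1/n1 versus x2/n2."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se if se > 0 else 0.0


def pairwise_column_test(columns, confidence=0.95):
    """Return (higher, lower) column pairs whose proportions differ significantly.

    Hypothetical input: columns maps a banner column label to
    (number of respondents choosing the answer, column base).
    """
    # Two-sided critical value, e.g. about 1.96 at 95% confidence.
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    significant = []
    for a, b in combinations(columns, 2):
        z = two_proportion_z(*columns[a], *columns[b])
        if abs(z) >= z_crit:
            # Record the pair with the significantly higher column first.
            significant.append((a, b) if z > 0 else (b, a))
    return significant


# Hypothetical counts: 24 of 60 men and 42 of 60 women chose the answer.
banner = {"Male": (24, 60), "Female": (42, 60)}
print(pairwise_column_test(banner))  # [('Female', 'Male')]
```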

- Select the checkbox next to each question you want to apply significance testing to.

Significance testing is applied to the crosstab of each selected question. You can select any of the following supported question types included in your report:

- Single Choice

- Single Choice Grid

- Multiple Choice

- Multiple Choice Grid

- Rating

- Rank Order

- Allocation

- Number

You can also apply significance testing to Group Answers and Group Numbers recode fields that have been added to your report as tiles.
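Number questions hold numeric answers rather than answer choices, so comparing banner columns usually means comparing their means. The article does not say which test the product runs for these questions. The sketch below assumes a large-sample z approximation to Welch's unequal-variance test (the Python standard library has no t distribution), which is reasonable when each column has at least 30 responses. All names and data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def two_mean_z(values_a, values_b):
    """Large-sample z statistic for a difference in means (unequal variances)."""
    # statistics.variance is the sample variance (n - 1 denominator).
    se = sqrt(variance(values_a) / len(values_a) + variance(values_b) / len(values_b))
    return (mean(values_a) - mean(values_b)) / se


def means_differ(values_a, values_b, confidence=0.95):
    """True if the two column means differ at the given two-sided confidence level."""
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return abs(two_mean_z(values_a, values_b)) >= z_crit


# Hypothetical Number answers from two banner columns, 30 responses each.
column_a = [5, 6, 7] * 10
column_b = [3, 4, 5] * 10
print(means_differ(column_a, column_b))  # True
```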

Note: Profile variables cannot currently be selected for significance testing.

- To compare each banner column with the total for the banner, select the Include comparisons of each column in the banner with the "Total" column checkbox.

Use this option to identify significant differences between one banner column and the combination of all banner columns.

Use caution when selecting this option. Because the Total column aggregates all responses, including those in the column being compared, the two are not independent groups. The larger the share of the Total that a column accounts for, the more the Total is pulled toward that column's own value, which can skew the results or make them inaccurate. Results of tests against the Total column might therefore be less reliable or meaningful than comparisons between truly independent groups.
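To illustrate the overlap problem, the sketch below uses hypothetical counts in which column A holds two thirds of all respondents. It compares column A with the Total using a standard two-proportion z-test, which assumes independent groups, and, for contrast, with only the respondents outside column A. The contrast assumes the banner columns are mutually exclusive (for example, a single-choice banner). This is an illustration of the statistical issue, not a description of how the product computes Total comparisons.

```python
from math import sqrt


def two_proportion_z(x1, n1, x2, n2):
    """Pooled two-proportion z statistic; assumes independent samples."""
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (x1 / n1 - x2 / n2) / se


# Hypothetical banner: column A holds 200 of the 300 respondents.
a_count, a_base = 120, 200        # 60% in column A
rest_count, rest_base = 40, 100   # 40% in all other columns combined
total_count, total_base = a_count + rest_count, a_base + rest_base  # about 53.3%

# Column A vs the Total column, which already contains column A.
z_total = two_proportion_z(a_count, a_base, total_count, total_base)
# Column A vs only the respondents who are not in column A.
z_rest = two_proportion_z(a_count, a_base, rest_count, rest_base)

print(f"A vs Total: z = {z_total:.2f}")  # about 1.47, below 1.96
print(f"A vs rest:  z = {z_rest:.2f}")   # about 3.27, above 1.96
```

At 95% confidence, the same 20-point gap between column A and everyone else is clearly significant in the independent comparison but is missed against the Total, because the Total is dominated by column A itself.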

- To change the confidence level, select one of the available options (99%, 95%, 90%, 80%, or 65%) from the Confidence Level drop-down list.

The default confidence level is 95%.

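A higher confidence level sets a stricter threshold, so fewer differences are flagged as significant, but the ones that are flagged are less likely to be due to chance. A lower confidence level flags more differences as significant, but each one has a greater chance of being a false positive that would not be repeated in another sample. For example, for a single comparison where no real difference exists, a 95% confidence level flags a difference about 5% of the time, while a 65% confidence level does so about 35% of the time.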
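Under the common assumption of a two-sided test with a normal approximation, each confidence level corresponds to a significance level alpha = 1 - confidence and a critical z value that a test statistic must reach before a difference is flagged. The sketch below computes these thresholds for the five available options; the product's exact calculation may differ, and `critical_z` is a hypothetical helper.

```python
from statistics import NormalDist

# The options offered in the Confidence Level drop-down list.
CONFIDENCE_LEVELS = [0.99, 0.95, 0.90, 0.80, 0.65]


def critical_z(confidence):
    """Two-sided critical z value: |z| must reach this to be flagged."""
    alpha = 1 - confidence
    return NormalDist().inv_cdf(1 - alpha / 2)


for level in CONFIDENCE_LEVELS:
    print(f"{level:.0%} confidence: alpha = {1 - level:.2f}, flag if |z| >= {critical_z(level):.3f}")
```

Lower confidence levels produce smaller thresholds, which is why they flag more differences.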

- To change the minimum sample size, select the Minimum Sample Size checkbox and enter an integer from 30 to 9,999.

The default minimum sample size is 30 responses. The text box will not accept values outside the supported range.

The minimum sample size applies to each banner column being tested, not to the banner question as a whole. For example, if you add a Gender banner with Male and Female columns, then under the default setting each of these columns must have at least 30 responses for significance testing to be applied to both of them. If the Male column had only 20 responses, significance tests would not be run for that column, and N/A would be displayed in the cell instead.
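The per-column rule can be sketched as follows. The range check mirrors the documented 30 to 9,999 limit, with a `ValueError` standing in for the text box rejecting a value; the function names are hypothetical, and the Female count of 45 is an invented value added to the Gender example above.

```python
MIN_ALLOWED, MAX_ALLOWED = 30, 9999  # documented range for Minimum Sample Size


def validate_min_sample_size(value):
    """Accept only an integer from 30 to 9,999 (stand-in for the text box check)."""
    if isinstance(value, bool) or not isinstance(value, int):
        raise ValueError("minimum sample size must be an integer")
    if not MIN_ALLOWED <= value <= MAX_ALLOWED:
        raise ValueError(f"minimum sample size must be from {MIN_ALLOWED} to {MAX_ALLOWED}")
    return value


def split_columns(column_bases, min_sample_size=30):
    """Split banner columns into tested columns and columns shown as N/A.

    column_bases maps each banner column label to its number of responses;
    the threshold is checked per column, not for the banner as a whole.
    """
    min_sample_size = validate_min_sample_size(min_sample_size)
    tested = [col for col, n in column_bases.items() if n >= min_sample_size]
    shown_as_na = [col for col, n in column_bases.items() if n < min_sample_size]
    return tested, shown_as_na


tested, shown_as_na = split_columns({"Male": 20, "Female": 45})
print(tested, shown_as_na)  # ['Female'] ['Male']
```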

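Raising the minimum sample size excludes smaller banner columns from testing, so every test that is run is based on a larger sample. Larger samples increase statistical power, which improves the ability to detect true differences and reduces false negatives, and they make results more precise and reliable. The trade-off is that fewer columns may be tested.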
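The effect of sample size on power can be seen with a standard normal-approximation power formula for a two-sided two-proportion test. The sketch assumes a hypothetical true difference of 50% versus 60% and equal column sizes; it illustrates the general relationship and is not a calculation the product performs.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(p1, p2, n_per_column, confidence=0.95):
    """Approximate power of a two-sided two-proportion test.

    Uses the unpooled standard error and ignores the negligible chance
    of rejecting in the wrong direction.
    """
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    se = sqrt(p1 * (1 - p1) / n_per_column + p2 * (1 - p2) / n_per_column)
    return NormalDist().cdf(abs(p1 - p2) / se - z_crit)


# Hypothetical true difference: 50% vs 60% choosing an answer.
for n in (30, 100, 400):
    print(f"n = {n:>3} per column: power ~ {approx_power(0.5, 0.6, n):.2f}")
```

With only 30 responses per column, a real 10-point difference is detected in a small minority of samples; power rises steadily as the columns grow.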

- Select Apply & Exit.

The appropriate significance tests are run for each selected question.

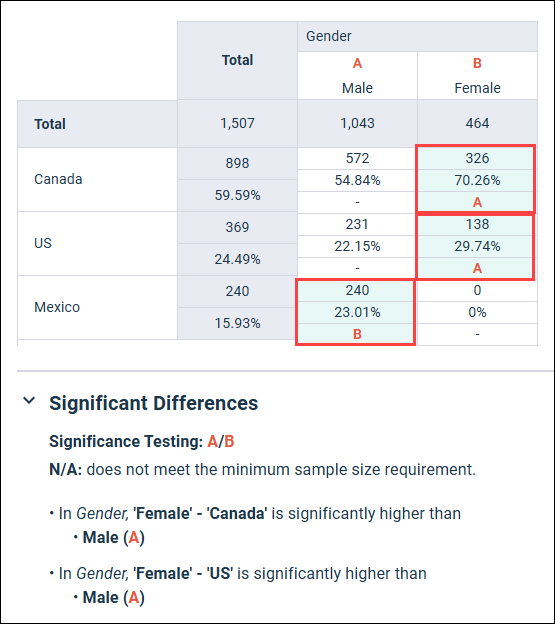

- Significant results are displayed in the crosstab: cells that are significantly higher than one or more other cells are highlighted in green.

- The significant results are also described in the Significant Differences panel below the crosstab.

- If the tests find no significant differences, the message "No significant differences found." is displayed in the Significant Differences panel.
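The display rules above can be sketched for a single answer row: a cell is highlighted if it is significantly higher than at least one other cell, and the panel falls back to the documented message when nothing is significant. The input is a list of hypothetical `(higher_column, lower_column)` pairs from a pairwise test; the wording of the per-pair panel lines is invented for illustration, and the green highlighting itself is not modeled.

```python
NO_DIFFERENCES_MESSAGE = "No significant differences found."


def summarize_results(significant_pairs):
    """Return (cells to highlight, panel lines) for one answer row.

    significant_pairs is a list of (higher_column, lower_column) tuples.
    """
    if not significant_pairs:
        return set(), [NO_DIFFERENCES_MESSAGE]
    # Highlight any cell that is significantly higher than at least one other cell.
    highlighted = {higher for higher, _ in significant_pairs}
    panel = [f"{higher} is significantly higher than {lower}." for higher, lower in significant_pairs]
    return highlighted, panel


cells, panel = summarize_results([("Female", "Male")])
print(cells, panel)  # {'Female'} ['Female is significantly higher than Male.']
```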

For information on significance test results, see Examining significance test results.