AI Follow-Up (beta)

Collect better qualitative feedback from participants by enabling AI Follow-Up for Open End questions.

Open-ended responses can vary widely in quality from one survey participant to another. Good responses are long and detailed, full of nuance and valuable qualitative insight. In a high-quality answer, you can tell that the participant took the time to respond to the question thoughtfully and thoroughly.

Poor-quality responses, on the other hand, tend to fall into one or more of these categories:

- Brief, surface-level answers with insufficient detail.

- Nonsensical or off-topic answers that don't address the question being asked.

- Unintelligible responses (for example, someone typing random keystrokes to get past a required Open End question).

- Rushed responses from speeders and bad actors.

Enabling AI Follow-Up can improve overall response quality. The feature generates follow-up questions based on the initial Open End question and each participant's answer. These questions prompt participants to dig deeper into aspects of their answers and provide more detail. The result is a higher-quality response with more insightful qualitative feedback.

How AI Follow-Up works

- To use this feature, you must enable AI features in the Alida platform. For more information, see Enable AI and Machine Learning.

- This feature is available in modern surveys only.

- This feature is available in English, French, and Spanish.

- This feature is available for Short Answer and Long Answer Open End questions.

- To enable the feature, make sure the question appears on its own page while you are authoring it, and then select Enable AI Generated Follow-up Questions.

- Translations are supported.

- You can test this feature in survey preview and with test activity links.

- Once the survey is live, you cannot change the Enable AI Generated Follow-up Questions setting.

Authoring effective Open End questions

AI Follow-Up uses both the initial Open End question and each participant's response to formulate follow-up prompts. For this reason, it's important to start with a strong opening question. Keep these tips in mind when you are authoring the question text:

- Be clear and specific

Vague questions yield vague answers, making it difficult for both the participant and AI Follow-Up to grasp the core intent. Clearly define the information you are seeking. This specificity provides an anchor for AI Follow-Up to understand the context of the initial response.

Example of a vague question:

What do you think about our service?

Clearer question:

What specific aspects of our customer service did you find most helpful or unhelpful during your recent interaction?

- Focus on one dimension

Avoid double-barreled questions, which ask about two things at once. Asking about one dimension at a time lets participants provide more focused answers and helps AI Follow-Up identify which part of the question a participant might have overlooked or answered incompletely.

Example: How satisfied are you with the product's features and ease of use?

This is actually two separate questions:

How satisfied are you with the product's features?

How satisfied are you with the product's ease of use?

- Use neutral and unbiased language

Leading questions or those with emotionally charged words can influence responses and skew the data. Strive for neutrality to encourage genuine and unfiltered feedback. This ensures the initial response is a true reflection of the participant's views, giving AI Follow-Up a more accurate starting point.

Example of biased language:

Don't you agree that our new interface is much better?

More neutral language:

What are your thoughts on the new interface compared to the previous one?

- Encourage elaboration and examples

Frame questions in a way that prompts detailed answers rather than simple "yes" or "no" responses or short phrases. Start questions with "How," "What," or "Why." Consider using phrases like "Could you tell me more about...?" or "Can you provide an example of...?"

Example: The question "Describe your experience with our onboarding process. What, if anything, stood out to you?" encourages a more narrative response, which gives AI Follow-Up richer context.

- Define the scope when necessary

If the question is too broad, participants might not know where to begin. A little direction helps channel the participant's thinking and provides AI Follow-Up with a more focused area for potential follow-ups.

Example with a less defined scope:

What are your suggestions for improvement?

More defined scope:

What specific suggestions do you have for improving the checkout process on our website?

- State the purpose to add clarity

Sometimes, briefly explaining why you're asking a particular question can help participants provide more relevant and thoughtful answers. This context can guide the participant and provide a clearer foundation for AI Follow-Up.

Example: "To help us understand how to better support our users, could you describe any challenges you faced while using [specific feature]?"

Considerations and limitations

- Not every Open End question needs AI Follow-Up. Some Open End questions are closed-ended in nature. For example, a question such as "List all the dog food brands you have ever purchased" asks for a factual list rather than an opinion or explanation, so it would not benefit from AI Follow-Up.

- Performance limitations. Generating follow-up questions can place a heavy load on the servers. The performance of this feature depends on how many participants are completing the survey, and how many in-field surveys have AI Follow-Up enabled, at any given moment. If server capacity is exceeded, participants are not shown any follow-up questions and simply proceed to the next page in the survey.

- Effect on survey length. If your survey contains many Open End questions and you enable AI Follow-Up on many or all of them, the survey can become significantly longer, because each of those questions can effectively turn into more than one Open End question. Account for this when you state the estimated completion time in invitations or welcome messages.

Responding experience

Participants respond to the Open End question as they normally would. If the answer is poor in quality (for example, off-topic, vague, too short, or lacking in detail), AI Follow-Up is triggered.

When participants click Next, they see another Open End question instead of the next survey page. AI Follow-Up generates this question from the initial Open End question and the participant's response. The participant answers the follow-up question and clicks Next to proceed to the next survey page.

AI Follow-Up is not triggered when participants provide good responses or when performance limitations prevent it from loading. In these cases, participants answer the initial Open End question and proceed to the next survey page without ever seeing a follow-up prompt.

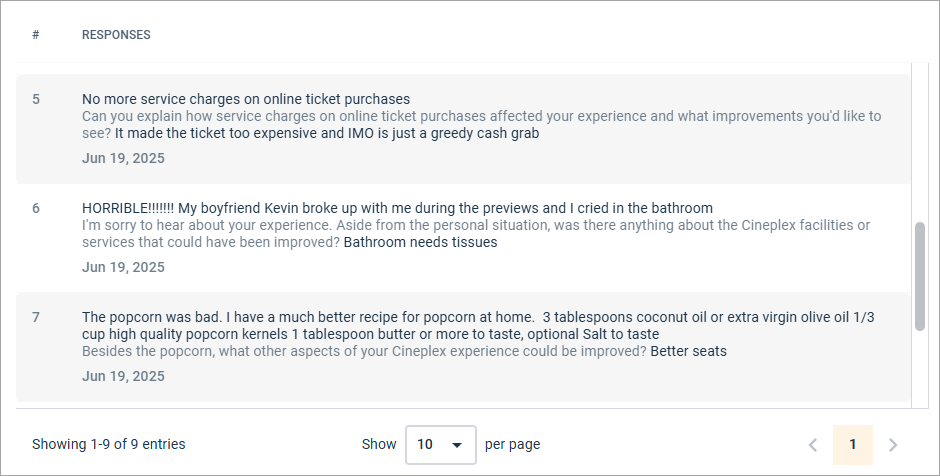

How follow-up responses are displayed in modern reporting

In modern reporting, participants' responses appear next to the AI Follow-Up questions. The questions appear inline in light grey text, and the responses appear in darker text.

The same formatting is used in modern report exports.