Significance testing in modern reports

When you use surveys to identify and understand differences between groups of people or responses, you can apply significance testing to assess the reliability of your findings.

Significance testing assesses whether your results are unlikely to be attributable to random chance, and whether they can be extrapolated to the target population with a certain level of confidence. In other words, significance tests help you to determine whether the differences observed in your data are likely to be real and representative of the entire target population.

In modern reports, significance tests:

- Are run in pairs. If your report has three banner columns (e.g. A, B, and C), separate significance tests are run on each of the following pairs to test all comparisons:

- A vs. B

- A vs. C

- B vs. C

- Are run against the same data the crosstab is displaying, including any filters that are applied.

- Always exclude "Did not answer" responses from the calculations, regardless of the Include "Did Not Answer" setting, to ensure accurate results. Filters are automatically added and applied to the report to exclude "Did not answer" responses from each crosstab that significance testing is run on.
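The pairing rule above can be illustrated with a short Python sketch. This is not the application's code; the function name `banner_pairs` is hypothetical and only shows how every unordered pair of banner columns is enumerated.

```python
from itertools import combinations

def banner_pairs(columns):
    """Return every unordered pair of banner columns (hypothetical helper)."""
    return list(combinations(columns, 2))

# Three banner columns produce three pairwise comparisons.
for left, right in banner_pairs(["A", "B", "C"]):
    print(f"{left} vs. {right}")
```

In general, a banner with k columns yields k(k-1)/2 pairs, so the number of tests grows quickly as you add banner columns.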

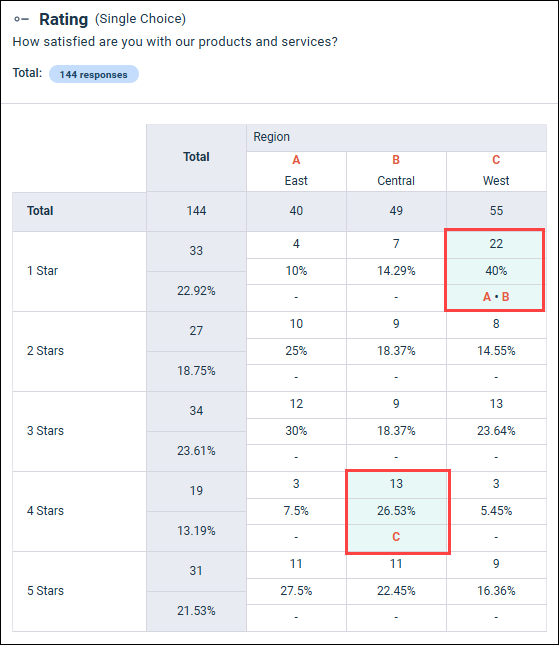

Example: Customer satisfaction by region

You surveyed customer satisfaction across three different regions: East, Central, and West. The survey asks customers to rate their overall satisfaction with the company's products and services on a scale of 1 (very dissatisfied) to 5 (very satisfied). The goal is to determine if there are any significant differences in satisfaction levels across these regions, which may indicate that there are region-specific issues impacting customer satisfaction.

You use the following workflow to conduct significance testing:

- Collect data

You use a survey to gather data from a sample of customers in each of the three regions. Each response includes:

- Region: A Single Choice question to record the region the respondent lives in (East, Central, or West).

- Rating: A 5-star Rating question to record the respondent's overall satisfaction level from 1 star (very dissatisfied) to 5 stars (very satisfied).

A minimum of 30 responses is required for each region included in the significance tests.

- Conduct significance testing

You complete the following steps to set up and run significance tests for your customer satisfaction survey:

- Create a new modern report in your survey.

- Add the Region question as a banner.

- Select Statistics > Significance Testing.

- Select the Rating question to apply significance testing to, and click Apply & Exit.

Use the default values for Confidence Level (95%) and Minimum Sample Size (30).

The application automatically determines the type of significance test to run based on the question type, and runs paired tests for each possible banner combination. The Region question is a Single Choice question, so a z-test is applied to each banner combination to check for significant differences in proportions.
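To make the idea of a z-test on proportions concrete, here is a minimal Python sketch of a standard pooled two-proportion z-test. The exact calculation the application performs is not described here, so treat this as an illustration only: the function name, its parameters, and the counts in the usage example are all hypothetical. The defaults mirror the Confidence Level (95%) and Minimum Sample Size (30) settings mentioned above.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(hits_a, n_a, hits_b, n_b,
                          confidence=0.95, min_sample=30):
    """Pooled two-proportion z-test (illustrative sketch, not the product's code).

    Returns (z, p_value, significant), or None if either base is too small.
    """
    if n_a < min_sample or n_b < min_sample:
        return None  # assumed behavior: skip the test below the minimum base
    p_a, p_b = hits_a / n_a, hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0, False  # both groups answered identically
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return z, p_value, p_value < 1 - confidence

# Made-up counts: 30 of 100 West respondents and 10 of 100 East
# respondents chose 1 star.
result = two_proportion_z_test(30, 100, 10, 100)
print(result)
```

A comparison is flagged as significant when the two-sided p-value falls below 1 minus the confidence level (0.05 at the default 95%).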

- Interpret your results

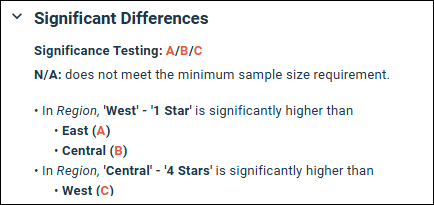

In the crosstab, two cells are highlighted in light green, indicating that significant differences have been identified.

The results indicate that:

- In the top row, the value in the "West (C) - 1 star" cell is significantly higher than the values in both the "East (A) - 1 star" and "Central (B) - 1 star" cells.

- In the fourth row, the value of the "Central (B) - 4 stars" cell is significantly higher than the value in the "West (C) - 4 stars" cell.

The differences in the cells highlighted in the crosstab are also summarized in the Significant Differences panel. For each entry, the row and banner of the significant finding are highlighted, followed by a bulleted list of the banner(s) that the highlighted cell is significantly higher than.

Weighting and significance testing

You can combine weighting and significance testing in your report. Weighting enables you to ensure that your data reflects the target population, and significance testing enables you to identify significant differences between groups within the target population.

When you run significance tests on weighted reports, the tests are run on the effective base (the distribution of responses for each group after weighting has been applied). If you want to run tests on the unweighted base (the original distribution of responses to your survey), you must first remove the weighting.
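For intuition about how weighting changes the base a test works with, the following Python sketch computes Kish's effective sample size, a common way of expressing an effective base in survey statistics. This is an assumption for illustration: the formula the application actually uses is not stated here, and the function name and weights are hypothetical.

```python
def kish_effective_base(weights):
    """Kish's effective sample size: (sum of weights)^2 / sum of squared weights.

    Illustrative only; not confirmed as the application's definition.
    """
    total = sum(weights)
    sum_of_squares = sum(w * w for w in weights)
    return total * total / sum_of_squares if sum_of_squares else 0.0

# Equal weights leave the base unchanged; uneven weights shrink it.
print(kish_effective_base([1, 1, 1, 1]))
print(kish_effective_base([2, 2, 1, 1]))
```

Under this definition, the more uneven the weights are, the smaller the effective base becomes relative to the unweighted base, which makes significant differences harder to detect.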

For more information on weighting, see Weighting in modern reports.