Distributing an unmoderated usability test

Learn tips and best practices for distributing your unmoderated usability test.

Do not distribute the activity to lots (i.e. hundreds or thousands) of participants. Aim for approximately 5-20 responses from pre-screened participants who you know will provide thoughtful feedback.

For example, if your community typically has a 10% response rate and you want to end up with 10 good recordings, send the invitation to 100 members with the goal of getting 10 responses. You could also consider batching your distribution into groups of 25 and sending one batch at a time.
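The arithmetic above can be sketched in a few lines of Python. This is only an illustrative calculation, not part of the Alida platform: the function names `invitations_needed` and `batch_sizes` are hypothetical, and the response rate is an estimate you supply from your own community's history.

```python
def invitations_needed(target_responses: int, response_rate_percent: int) -> int:
    """Estimate how many members to invite to reach a target number of responses.

    response_rate_percent is a whole-number percentage (for example, 10 for 10%).
    The result is rounded up so the estimate never falls short of the target.
    """
    if target_responses < 0:
        raise ValueError("target_responses must not be negative")
    if not 0 < response_rate_percent <= 100:
        raise ValueError("response_rate_percent must be between 1 and 100")
    # Integer ceiling division avoids floating-point rounding surprises.
    return (target_responses * 100 + response_rate_percent - 1) // response_rate_percent


def batch_sizes(total_invitations: int, batch_size: int) -> list[int]:
    """Split the total invitation count into batches to send one at a time."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    full_batches, remainder = divmod(total_invitations, batch_size)
    sizes = [batch_size] * full_batches
    if remainder:
        sizes.append(remainder)  # the last, smaller batch
    return sizes


# The example from the text: 10 recordings at a 10% response rate, batches of 25.
total = invitations_needed(10, 10)
print(total)                   # 100
print(batch_sizes(total, 25))  # [25, 25, 25, 25]
```

Sending in batches lets you stop early if you reach your target number of usable recordings before all batches have gone out.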

Here are some other tips for distributing your activity:

- Remember that usability testing may be a new experience for participants who are used to completing surveys. You may need to include more explanations in the invitation or welcome text about what the process is like and what they can expect.

- Talking points you may want to cover in the invitation or welcome message include:

- The estimated length of the activity.

- Reminding participants to ensure they have enough time to do the activity (ideally without interruption).

- Reminding participants to think out loud.

- Reminding participants to be on desktop (not mobile).

- Gentle encouragement like "There are no wrong answers," "Have fun," or "Please remember that we're assessing the usability of the product, not you!"

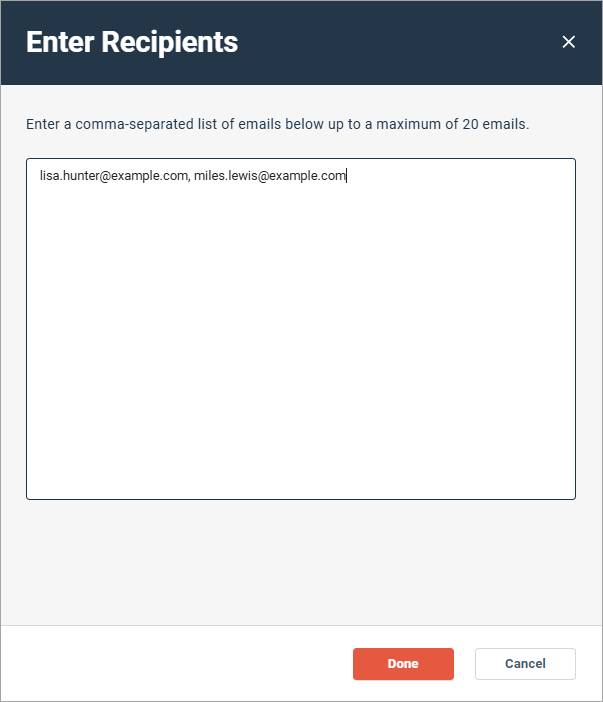

- If you know exactly which recipients you want to reach, use the Enter recipients email functionality when you're adding recipients to an invitation.

- Alternatively, after you have created or edited a member filter, click the Select Participants tab to select participants individually. You can use the Select Participants tab if your initial member filter yields fewer than 500 members, and you can select up to 50 members.

Note: The Select Participants tab is only visible if you are an admin or a user with the Can access sensitive data permission enabled.

- Do a soft launch before a full launch. Identify any potential issues with your activity or messaging and fix them.

The pool of usability testing participants is a small subset of your community. Out of that pool, the number of participants for one study could be even smaller. You don't want to "waste" your precious limited number of potential completes on a flawed study.

Do a soft launch to get 1-2 responses first. Or better yet, test your activity with internal stakeholders or colleagues who aren't familiar with the usability workflow you're testing.

- Offer an incentive.

Usability tests are more time-intensive than typical surveys, and incentives are a nice way to motivate participants and thank them for their participation. If you do use incentives though, we recommend:

- Not sending the incentive to participants automatically upon completion. Incentives for these types of activities are typically higher than for standard surveys, and you don't want a participant who completed the activity poorly (for example, with incomprehensible speech or audio, or by speeding through the activity) to automatically get a reward for an unusable response. Review the responses first, and then distribute the incentive rewards to participants who put in the effort.

- Clearly communicating to participants when they can expect to receive their incentive, or whether it will be offered for high-quality responses only.

For more information about incentive options in the Alida platform, see Incentives.

After you distribute an unmoderated usability test and results start to come in, the next step is reporting on unmoderated usability test results.